Part III of a 3-part summary of a 2018 workshop on Superintelligence in SF. See also [Part I: Pathways] and [Part II: Failures].

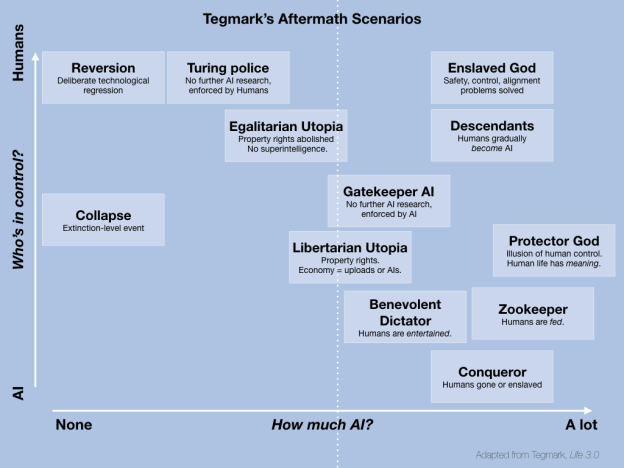

The third and final part of the apocalyptic taxonomy describes the outcome, or aftermath, of the emergence and liberation of artificial superintelligence. The list of scenarios is taken from Tegmark (2018). In my introductory slide I tried to roughly order these scenarios along two axes: the capability of the superintelligence, and the degree to which it is in control.

These scenarios are neither clearly delineated nor comprehensive. There is a longer description in Chapter 5 of Tegmark (2018). Another summary, AI Aftermath Scenarios, is on the Future of Life Institute blog, which also reports the results of a survey about which scenario people prefer.

Collapse

Intelligent life on Earth becomes extinct before a superintelligence is ever developed, because civilisation brings about its own demise by means other than an AI apocalypse.

Reversion

Society has chosen deliberate technological regression so as to forestall the development of superintelligence forever. In particular, people have abandoned and outlawed research and development in relevant technologies, including many discoveries from the industrial and digital ages, possibly even the scientific method. This decision may be a reaction to an earlier near-catastrophic experience with such technology.

- Frank Herbert, Dune (novel 1965).

- Walter M. Miller, A Canticle for Leibowitz (novel 1960).

- Catherine Asaro, The Quantum Rose (novel 2000).

Turing Police

Superintelligence has not been developed, and societies have strict control mechanisms that prevent research and development in relevant technologies. These may be enforced by a totalitarian state using the police or universal surveillance.

Tegmark’s own label for this scenario is “1984,” which the workshop participants unanimously rejected.

- William Gibson, Neuromancer (novel 1984).

- Philip K. Dick, Do Androids Dream of Electric Sheep? (novel 1968, adapted as Blade Runner, film 1982), and Autofac (short story 1955).

Egalitarian Utopia

Society includes humans, some of whom are technologically modified, and uploads.

Potential conflicts arising from productivity differences between these groups are avoided by abolishing property rights.

- Marshall Brain, Manna (novel/essay 2013).

- Ursula K. Le Guin, The Dispossessed (novel 1974).

- Charles Stross, Accelerando (novel 2005).

Libertarian Utopia

Society includes humans, some of whom may be technologically modified, and uploads. Biological life and machine life have segregated into different zones. The economy is almost entirely driven by the fantastically more efficient uploads. Biological humans coexist peacefully with the machine zones and benefit from trading with them, even though their own economic, technological, and scientific output is irrelevant.

- Marshall Brain, Manna (novel/essay 2013).

- Robin Hanson, The Age of Em (nonfiction 2016).

Gatekeeper AI

A single superintelligence has been designed. The value alignment problem has been resolved so that the superintelligence has one single goal: to prevent the creation of a second superintelligence while interfering as little as possible in human affairs. This scenario differs from the Turing Police scenario in the number of superintelligences actually constructed (one rather than none), and it need not involve a police state.

- Alphaville (film 1965).

Descendants

The superintelligence has come about through a gradual modification of modern humans. Thus, there is no conflict between the factions of “existing biological humans” and “the superintelligence”: the latter is simply the descendant life form of the former. “They” are “we”, or rather “our children.” Twenty-first-century Homo sapiens is long extinct, but voluntarily so, just as each generation of parents eventually gives way to its children.

- Harry Harrison, A Tale of the Ending (story in One Step from Earth, 1970).

- Arthur C. Clarke, The City and the Stars (novel 1956).

Enslaved God

The remaining scenarios all assume a superintelligence of vastly superhuman intellect. They differ in how much humans are “in control.”

In the Enslaved God scenario, the safety problems of developing superintelligence (control, value alignment) have been solved. The superintelligence is a willing, benevolent, and competent servant to its human masters.

Protector God

The superintelligence wields significant power but remains friendly and discreet, nudging humanity unnoticeably in the right direction. Humans retain an illusion of control, and their lives remain challenging and feel meaningful.

- Iain M. Banks, The Culture series (novels 1987–2012).

- Isaac Asimov, the Zeroth Law of his Laws of Robotics.

Benevolent Dictator

The superintelligence is in control, and openly so. The value alignment problem is solved in humanity’s favour, and the superintelligence ensures human flourishing. People are content and entertained. Their lives are free of hardship or even challenge.

- The Matrix (film 1999).

- I, Robot (film 2004).

- WALL-E (film 2008).

Zookeeper

The omnipotent superintelligence ensures that humans are fed and safe, maybe even healthy. Human lives are comparable to those of zoo animals: humans feel unfree, may be enslaved, and are significantly less happy than modern humans.

Conquerors

The superintelligence has not kept humans around. Humanity is extinct and has left no trace.

- Terminator (film franchise 1984–).

Oz

Workshop participants quickly noticed the large empty space in the lower left corner of the slide! In that corner, no superintelligence has been developed, yet the (imagined) superintelligence is in control.

- The Wizard of Oz (film 1939).

Other fictional AI tropes are out of scope, in particular indentured, mundane artificial intelligences that may outperform humans at specific cognitive tasks (such as C-3PO’s language facility or many spaceship computers) without otherwise exhibiting superior reasoning skills.